Dialectic Agent Networks: A Human-Inspired Approach to AI Development

Most AI agents are trained to agree: same dataset, same goals, same outputs. But humans don't work like that. We grow by encountering disagreement, adapting, and evolving our perspectives.

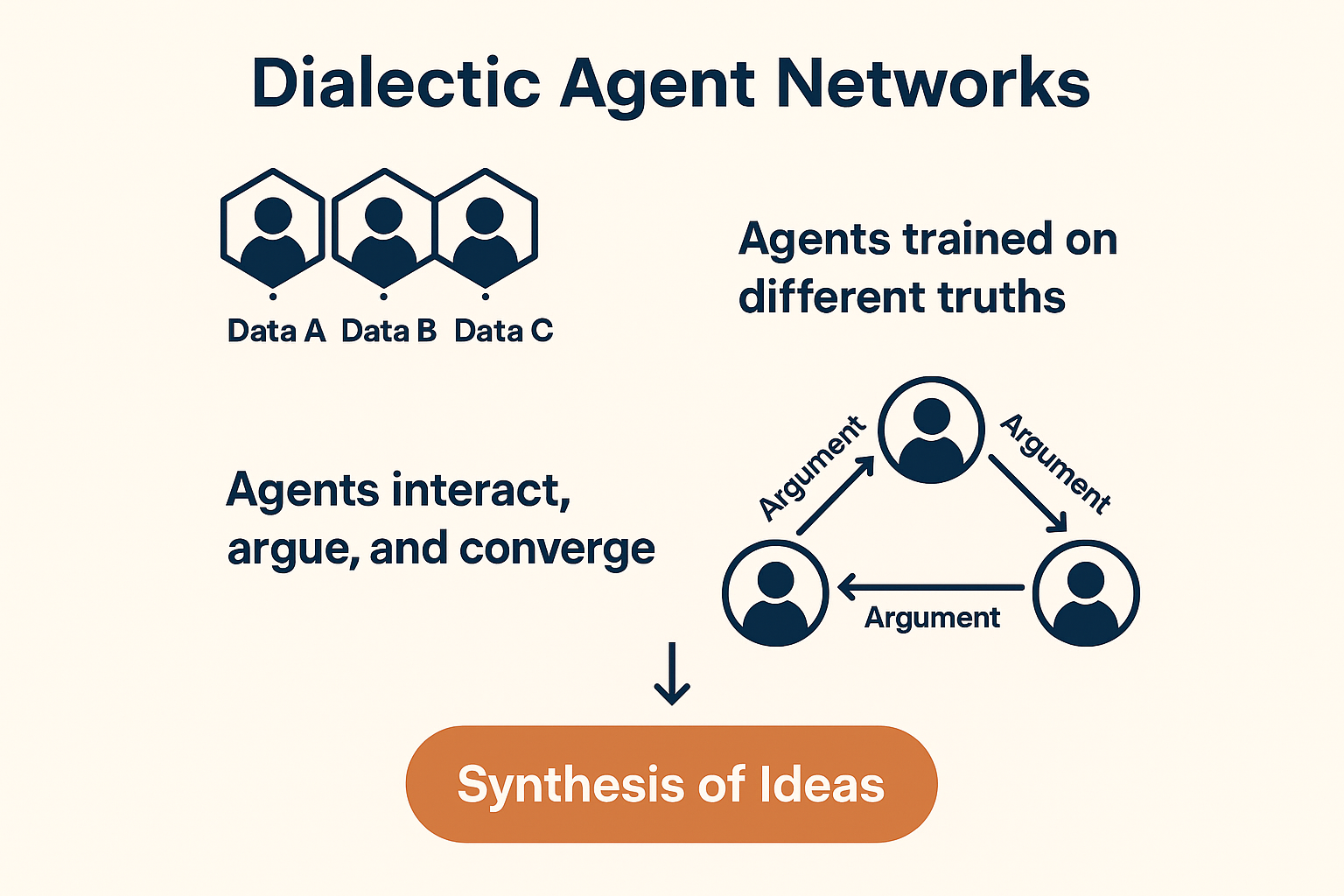

I'm working on a new approach that I've named Dialectic Agent Networks (D.A.N., fittingly sharing my name 😃): a system where agents trained on different truths interact, argue, and converge.

Think of it as structured debate, not consensus: a system where cognitive diversity becomes a strength, not a bug.

As an engineering leader who's witnessed the evolution of AI, I've been contemplating what truly separates human intelligence from artificial systems. The answer lies not just in our neural architecture but in how we build understanding from limited, diverse experiences over time.

The Problem with Current AI

Today's AI development follows a predictable pattern: feed massive datasets to large models and expect human-like reasoning. But humans don't learn this way. We gather small amounts of data over decades, forming opinions that evolve with new experiences.

I grew up in Jamaica as a minority. I've faced failures and successes, gotten married, started raising a child, moved to a new country and then to a new state, picked up new hobbies, and built a diverse circle of friends. Each of those experiences shaped my perspective in ways that differ from someone with a completely different background. Our diversity of experience creates a collective intelligence that no single human could achieve alone.

A Different Path Forward

What if we built AI systems that mimic this human approach? Instead of creating specialized agents for different tasks, we could develop multiple agents for the same task and train each one on a different dataset: a different "truth."

These agents would then debate their perspectives, learning from each other and refining their understanding over time. This approach, which I call Dialectic Agent Networks, could produce more robust, more creative, and ultimately more intelligent systems.
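To make the debate-and-converge loop concrete, here is a minimal Python sketch under simplifying assumptions; it is not the implementation behind any app mentioned in this post. Each hypothetical `Agent` fits a one-parameter linear model on its own data subset (its "truth"). In each round of the hypothetical `debate` function, every agent scores all claims against its own evidence, weights them with a made-up credibility rule (1 / (1 + error)), and moves partway toward that weighted view, controlled by an assumed `stubbornness` parameter. Numerically, this is a weighted-averaging (DeGroot-style) opinion-dynamics update rather than an argument in natural language.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class Agent:
    """A hypothetical D.A.N. agent: a tiny model that only sees its own "truth"."""
    name: str
    data: list[tuple[float, float]]  # (x, y) observations from this agent's data subset
    slope: float = 0.0               # the agent's current belief (its "claim")

    def train(self) -> None:
        # Least-squares slope through the origin, fit on this agent's data only
        self.slope = sum(x * y for x, y in self.data) / sum(x * x for x, _ in self.data)

    def error_of(self, claim: float) -> float:
        # Mean squared error of any claimed slope, judged against this agent's evidence
        return fmean((y - claim * x) ** 2 for x, y in self.data)


def debate(agents: list[Agent], rounds: int = 5, stubbornness: float = 0.5) -> list[float]:
    """Assumed debate rule: each round, every agent scores all claims on its own data,
    then moves partway toward the credibility-weighted average of those claims."""
    for _ in range(rounds):
        claims = [a.slope for a in agents]  # snapshot so every agent responds to the same round
        updated = []
        for agent in agents:
            # Credibility = 1 / (1 + error): claims that fit my evidence count for more
            weights = [1.0 / (1.0 + agent.error_of(c)) for c in claims]
            persuaded = sum(w * c for w, c in zip(weights, claims)) / sum(weights)
            # Keep part of my own view and adopt the rest from the weighted debate
            updated.append(stubbornness * agent.slope + (1 - stubbornness) * persuaded)
        for agent, new_slope in zip(agents, updated):
            agent.slope = new_slope
    return [a.slope for a in agents]


# Toy, made-up data: three agents see different slices of roughly the same relationship
agents = [
    Agent("A", [(1, 2.0), (2, 4.1), (3, 6.2)]),
    Agent("B", [(1, 3.0), (2, 5.9), (3, 9.1)]),
    Agent("C", [(1, 2.4), (2, 5.0), (3, 7.4)]),
]
for agent in agents:
    agent.train()

before = [a.slope for a in agents]
after = debate(agents, rounds=10)
print("before:", [round(s, 2) for s in before])
print("after: ", [round(s, 2) for s in after])
```

The `stubbornness` knob is the design choice that keeps this a debate rather than instant agreement: at 1.0 no agent ever changes its mind, while at 0.0 each agent adopts its credibility-weighted view outright. Because every update is a weighted average of the current claims, the spread between agents shrinks each round instead of one agent simply overruling the rest.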

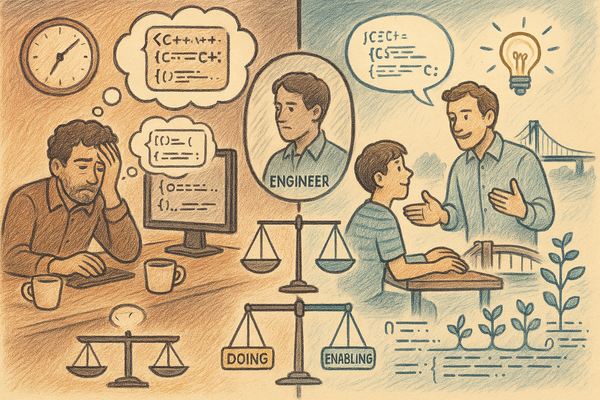

Building a Community, Not a Machine

The key insight here isn't technical; it's social. We're not building one perfect AI, but a community of imperfect AIs that challenge and refine each other. Just as human communities thrive through diversity of thought and experience, these AI networks would discover better solutions through their differences, not their similarities.


Think of it as creating a virtual roundtable of peers working on the same problem, each bringing different experiences to the conversation. Their disagreements aren't bugs to be eliminated but the very engine of their collective intelligence.

Practical Benefits

This framework offers several advantages:

- Smaller, more efficient models requiring less computing power

- More transparent reasoning processes

- Built-in diversity of perspective

- Continuous learning through agent interactions

- Reduced risk of blind spots and biases

From Concept to Reality

In my spare time, I'm exploring this concept through an app we're building that recognizes trends using multiple AI agents trained on different data subsets.
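The post doesn't describe how the trend app works internally, so the following is only a hypothetical Python sketch of one way the "different data subsets" idea could look: each agent labels a trend from its own slice of data, and the aggregate keeps the minority view visible instead of averaging it away. The agent names, the least-squares slope classifier, the 0.05 `tolerance`, and the `dialectic_vote` function are all illustrative assumptions, and the series are made-up toy numbers.

```python
from collections import Counter


def slope(series: list[float]) -> float:
    """Ordinary least-squares slope of a series against its time index 0..n-1."""
    n = len(series)
    x_mean = (n - 1) / 2
    y_mean = sum(series) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(series))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def trend_label(series: list[float], tolerance: float = 0.05) -> str:
    """One agent's reading of the trend, based only on its own data subset."""
    s = slope(series)
    if s > tolerance:
        return "rising"
    if s < -tolerance:
        return "falling"
    return "flat"


def dialectic_vote(views: dict[str, list[float]]) -> dict:
    """Aggregate per-agent labels, but keep the dissenters visible instead of discarding them."""
    labels = {agent: trend_label(series) for agent, series in views.items()}
    majority, votes = Counter(labels.values()).most_common(1)[0]
    dissent = {agent: label for agent, label in labels.items() if label != majority}
    return {"majority": majority, "agreement": votes / len(labels), "dissent": dissent}


# Toy, made-up series: each hypothetical agent watches a different data source
result = dialectic_vote({
    "news_agent": [10, 11, 12, 14, 15],
    "social_agent": [5, 6, 6, 8, 9],
    "sales_agent": [20, 19, 18, 18, 17],
})
print(result)
```

Returning the dissent alongside the majority is what ties this to the transparency and blind-spot points above: a reader can see that one source disagrees and ask why, rather than receiving a single averaged answer.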

As an engineering leader, I see this approach not just as technically interesting but as a step toward more responsible and effective AI. Rather than creating homogeneous systems that all make the same mistakes, we could develop AI that thinks critically through the clash of different perspectives, just as humans do.

This isn't just about better technology; it's about building systems that reflect the beautiful complexity of human understanding.

If human wisdom comes from diversity of thought, shouldn't our AI systems reflect that same principle?