Do We Still Need Human-Readable Code in an AI Future?

For 70+ years we’ve climbed the readability ladder—from raw opcodes to assembly, to C, to Python and TypeScript. Every step was a concession to human limits: short-term memory, syntax fatigue, cognitive load.

But what happens when the authors aren’t human anymore?

Industry leaders at Anthropic, Microsoft, Google, and Meta keep predicting that AI will soon write most code. And the shift is already underway:

- 63% of developers already use AI tools daily (Stack Overflow, 2024)

- Teams accept roughly one-third of Copilot’s suggestions

- Reviewers are 5% more likely to approve AI-assisted code

Machines aren’t sidekicks anymore—they’re becoming primary authors.

Readability Was Always a Favor to Ourselves

```javascript
// We created promises because callbacks were too hard for *our* brains
getData()
  .then(processData)
  .then(displayResult);
```

A CPU never got confused by callback pyramids. We did. The same is true of most modern language conveniences:

- Async/await – to make promise chains easier to follow

- Type inference – to reduce repetitive annotations

- String interpolation – because concatenation was too messy

- Tabs vs. spaces, bracket placement, linting rules – rituals for humans, not machines

All of it designed to make life easier for us, not the computer.
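To make that concrete, here is a minimal sketch (assuming Node.js or a modern browser) that runs the same three-step pipeline as nested callbacks, as a promise chain, and with async/await. The step functions `fetchNumbers`, `doubleAll`, and `total`, and the `step` helper, are hypothetical stand-ins, and `setTimeout` merely simulates asynchronous I/O. All three versions compute the same value; only the reading experience changes.

```javascript
// Hypothetical pipeline steps, loosely mirroring getData/processData/displayResult.
const fetchNumbers = () => [1, 2, 3];
const doubleAll = (xs) => xs.map((x) => x * 2);
const total = (xs) => xs.reduce((sum, x) => sum + x, 0);

// Simulated async I/O: run fn(input) on a later tick, then pass the result to callback.
function step(fn, input, callback) {
  setTimeout(() => callback(fn(input)), 0);
}

// Promise wrapper around the same callback-based step.
const stepAsync = (fn, input) =>
  new Promise((resolve) => step(fn, input, resolve));

// 1. Nested callbacks: the "pyramid" that humans found hard to follow.
function viaCallbacks(done) {
  step(fetchNumbers, undefined, (data) => {
    step(doubleAll, data, (processed) => {
      step(total, processed, (result) => {
        done(result);
      });
    });
  });
}

// 2. Promise chain: the same steps, flattened with .then().
function viaPromises() {
  return stepAsync(fetchNumbers)
    .then((data) => stepAsync(doubleAll, data))
    .then((processed) => stepAsync(total, processed));
}

// 3. async/await: the same steps, written like sequential code.
async function viaAsyncAwait() {
  const data = await stepAsync(fetchNumbers);
  const processed = await stepAsync(doubleAll, data);
  return stepAsync(total, processed);
}

// The machine gets the same answer from all three; only the human view differs.
Promise.all([
  new Promise((resolve) => viaCallbacks(resolve)),
  viaPromises(),
  viaAsyncAwait(),
]).then((results) => console.log(results)); // [ 12, 12, 12 ]
```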

If AI Writes 100% of Code, Why Not Drop the Training Wheels?

If a model can emit provably correct machine code in milliseconds, the traditional source file becomes just a log entry. We might hand the AI a spec, some tests, and performance requirements—and receive a compiled binary in return. Inputs, outputs, guarantees. Done.

That’s a radical thought: our carefully crafted languages could become mere compatibility layers for legacy systems. Future stacks might look more like compiler IR or WASM than Python scripts. The real currency? Behavioral contracts, not for-loops.
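What might “inputs, outputs, guarantees” look like in practice? Below is a minimal sketch, assuming a runtime with the standard `WebAssembly` global (Node.js or a modern browser). The “binary” is a hand-assembled 41-byte WebAssembly module exporting a 32-bit `add`, and it is checked against a small behavioral contract: a few example cases plus a commutativity property. `ADD_WASM`, `addContract`, and `checkContract` are hypothetical names for illustration, not an existing testing API.

```javascript
// Hand-assembled WebAssembly module exporting add(i32, i32) -> i32.
// To a human it is just bytes; to the runtime it is a complete program.
const ADD_WASM = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" -> func 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code: local.get 0, local.get 1, i32.add, end
]);

// Hypothetical behavioral contract: example cases plus named properties.
const addContract = {
  name: "add",
  examples: [
    { args: [2, 3], expected: 5 },
    { args: [-7, 7], expected: 0 },
    { args: [2147483647, 1], expected: -2147483648 }, // 32-bit wraparound
  ],
  properties: {
    commutative: (f, a, b) => f(a, b) === f(b, a),
  },
  samples: [0, 1, -1, 42, 2147483647, -2147483648],
};

// Check any callable against the contract; returns a list of failure messages.
function checkContract(impl, contract) {
  const failures = [];
  for (const { args, expected } of contract.examples) {
    const actual = impl(...args);
    if (actual !== expected) {
      failures.push(`${contract.name}(${args}) = ${actual}, expected ${expected}`);
    }
  }
  for (const [propName, holds] of Object.entries(contract.properties)) {
    for (const a of contract.samples) {
      for (const b of contract.samples) {
        if (!holds(impl, a, b)) failures.push(`${propName} failed for (${a}, ${b})`);
      }
    }
  }
  return failures;
}

// Instantiate the opaque binary synchronously (fine for a module this small).
const wasmAdd = new WebAssembly.Instance(new WebAssembly.Module(ADD_WASM)).exports.add;

console.log(checkContract(wasmAdd, addContract)); // []
console.log(checkContract((a, b) => a + b, addContract).length); // 1 (the wraparound case)
```

The readable one-liner `(a, b) => a + b` fails the contract's wraparound case, while the unreadable module passes. In this toy setting, the contract, not the source text, is what carries the guarantee, and it guarantees only what it actually checks.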

So if we’re no longer the ones writing code, why are we still designing everything for human readers?

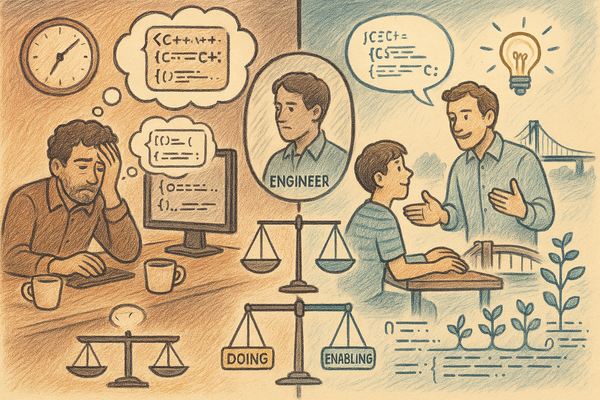

Why We May Still Care (At Least for Now)

- Security & Audit – Regulators can’t approve black boxes. Human-readable diffs are still the best way to spot sneaky data leaks or logic bombs.

- Debugging & Drift – Even if AI is 99% right, that 1% matters. When business logic changes or failures emerge, we’ll need to trace why the AI did what it did.

- Long-Term Maintenance – Prompts vanish, models evolve, rules shift. Human-readable code can serve as the Rosetta Stone when everything else moves on.

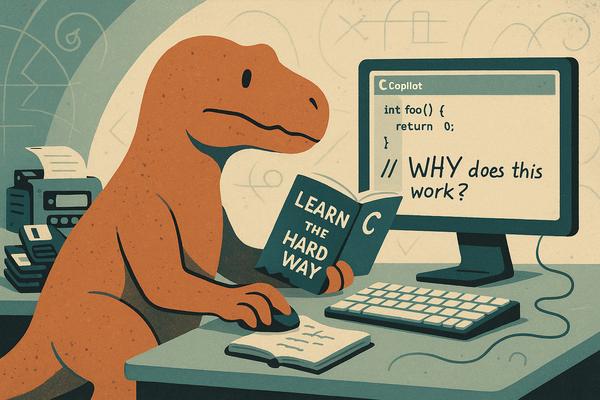

- Education & Culture – Reading code is how we learn to think computationally. If developers grow up with only opaque binaries, we lose oversight and understanding.

Even in a fully AI-authored world, “readability” may simply move from source code to specs and summaries. We would still communicate intent, just not through curly braces.

So, Are Programming Languages Already Fossils?

Maybe. Or maybe they’re the scaffolding we still need while AI earns our trust.

Copilot’s acceptance rate is around 30%. Impressive, but it means humans still reject roughly 70% of its suggestions. And a single subtle bug or security flaw can cost far more than all the time we saved.

The trend is clear, though: as AI quality rises, the ROI of hand-crafting syntax falls.

We’re not debating tabs vs. spaces anymore—we’re debating transparency vs. throughput.

🤔 When AI out-programs us completely, do we keep languages around for nostalgia, compliance, and teaching?

Or do we aim straight for the silicon and let human readability fade into history?